This blog post walks through a real-world installation of Oracle RAC on Solaris 10, with Openfiler 2.3 as the shared iSCSI storage server.

Solaris Servers are configured as follows:

| Machine Name | Instance Name | Database | Oracle Base | Filesystem/Vol Manager |
|---|---|---|---|---|
| RAC1 | orcl1 | orcl | /export/home/rac | ASM |
| RAC2 | orcl2 | orcl | /export/home/rac | ASM |

| File Type | File Name | iSCSI Volume | Mount Point | File System |
|---|---|---|---|---|
| OCR | /dev/rdsk/c2t2d0s1 | OCR | - | RAW |
| CRS | /dev/rdsk/c2t3d0s1 | CRS | - | RAW |

IP details are as follows:

RAC1

| Device | IP Address | Subnet Mask | Gateway | Purpose | Host Name |
|---|---|---|---|---|---|
| rge0 | 192.168.3.50 | 255.255.252.0 | 192.168.0.102 | Public IP | RAC1 |
| rtls0 | 192.168.10.10 | 255.255.255.0 | | Private IP | RAC1-Priv |
| | 192.168.3.55 | 255.255.255.0 | | Virtual IP | RAC1-VIP |

RAC2

| Device | IP Address | Subnet Mask | Gateway | Purpose | Host Name |
|---|---|---|---|---|---|
| rge0 | 192.168.3.51 | 255.255.252.0 | 192.168.0.102 | Public IP | RAC2 |
| rtls0 | 192.168.10.11 | 255.255.255.0 | | Private IP | RAC2-Priv |
| | 192.168.3.56 | 255.255.255.0 | | Virtual IP | RAC2-VIP |

Openfiler IP

| Device | IP Address | Subnet Mask | Gateway | Purpose | Host Name |
|---|---|---|---|---|---|
| eth0 | 192.168.3.52 | 255.255.252.0 | 192.168.0.102 | Public IP | openfiler |
| eth1 | 192.168.10.12 | 255.255.255.0 | | Private IP | openfiler-priv |
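Before installing anything, it is worth sanity-checking the addressing plan above. For example, each node's public address and the default gateway should share a subnet. The sketch below shows one way to check this in a POSIX shell. On Solaris 10, run it with ksh or bash, because /bin/sh there is the old Bourne shell, which has no `$(( ))` arithmetic. `same_subnet` is a hypothetical helper written for this post, not a Solaris command.

```sh
# same_subnet IP1 IP2 MASK
# Hypothetical helper: exits 0 when IP1 and IP2 fall in the same IPv4
# subnet under MASK. It ANDs each octet with the matching mask octet, so
# the arithmetic stays small enough for 32-bit shells.
same_subnet() {
    (
        IFS=.
        # Split the three dotted quads into 12 positional parameters.
        set -- $1 $2 $3
        a1=$1 a2=$2 a3=$3 a4=$4
        b1=$5 b2=$6 b3=$7 b4=$8
        shift 8
        # $1..$4 now hold the mask octets.
        [ $((a1 & $1)) -eq $((b1 & $1)) ] &&
        [ $((a2 & $2)) -eq $((b2 & $2)) ] &&
        [ $((a3 & $3)) -eq $((b3 & $3)) ] &&
        [ $((a4 & $4)) -eq $((b4 & $4)) ]
    )
}
```

For example, `same_subnet 192.168.3.50 192.168.0.102 255.255.252.0` succeeds for RAC1's public address and its gateway. By contrast, `same_subnet 192.168.10.10 192.168.3.50 255.255.255.0` fails, because the private and public networks are separate.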

Install the Solaris Operating System

Perform the following installation on both Oracle RAC nodes in the cluster.

This section provides a summary of the screens used to install the Solaris operating system. This guide is designed to work with the Solaris 10 operating environment.

The ISO images for Solaris 10 x86 can be downloaded from here. You will need a Sun Online account to access the downloads but registration is free and painless.

There are some basic system requirements that need to be met before Solaris 10 can be installed. Note that the minimum requirements listed below are recommended minimums.

- Minimum 256 MB of physical RAM

- Minimum 5 GB of available hard drive space

- Minimum 400 MHz CPU speed

- DVD or CD-ROM drive

- Attached monitor or integrated display

For more information on Solaris requirements, visit here.

After downloading and burning the Solaris 10 OS images to CD, power up the first server (RAC1 in this example) and insert Solaris 10 disk #1 into the drive tray. Follow the installation prompts as noted below. After completing the Solaris installation on the first node, perform the same Solaris installation on the second node.

Boot Screen

The Solaris 10 OS install and boot process is based on the GNU GRUB loader. The first screen will be the GRUB boot screen. Since this example is based on x86 hardware, select the first entry on this screen, as it corresponds to the hardware architecture (x86) on which the Solaris OS is being installed.

Selecting the Type of Installation

After the enter key is pressed, the following message is displayed:

Booting 'Solaris'

kernel /boot/multiboot kernel/unix -B install_media=cdrom

Multiboot-elf...

Once the first modules are loaded, six installation options are presented.

SunOS Release 5.10 Version Generic_Patch 32-bit

Copyright 1983-2005 Sun Microsystems, Inc. All rights reserved.

Use is subject to license terms.

Configuring devices.

1. Solaris Interactive (default)

2. Custom JumpStart

3. Solaris Interactive Text (Desktop session)

4. Solaris Interactive Text (console session)

5. Apply driver updates

6. Single user shell

Automatically continuing in xx seconds

(timeout)

In this example, we will be choosing the first option (Solaris Interactive). After selecting this option, the following output is displayed (this will differ depending on your hardware).

Solaris Interactive

Using install cd in /dev/dsk/c1t0d0p0

Using RPC Bootparams for network configuration information.

Attempting to configure interface e1000g0...

Skipped interface e1000g0...

Attempting to configure interface rge0...

Skipped interface rge0...

Beginning system identification...

Searching for configuration files(s)...

Search complete.

Proposed Window System Configuration for Installation:

Video Devices: xxxxxxxxxxxxxxx

Video Drivers: xxxxxxxxxxxxxxx

Resolution/Colors: xxxxxxxxxxxxxxx

Screen Size: xxxxxxxxxxxxxxx

Monitor Type: xxxxxxxxxxxxxxx

Keyboard Type: xxxxxxxxxxxxxxx

Pointing Device: xxxxxxxxxxxxxxx

Press <ENTER> to accept proposed configuration

or <ESC> to change proposed configuration

or to pause

<<< timeout in 30 seconds>>>

After the ENTER key is pressed, the system switches to graphics mode and continues with the next section of the installation unless the ESC key is pressed.

System Configuration

A series of screens guide you through the configuration process. Make the appropriate choices for your language in the first window. The welcome screen will then appear as shown.

Figure 2 Solaris Welcome Screen

Click [Next] to continue the installation process.

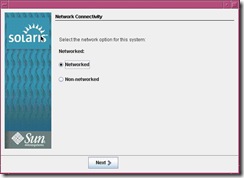

The network connectivity screen then appears. Ensure that the Networked button is enabled as shown below. In the screenshots that follow, the network interfaces are pcn0 and pcn1 because these screenshots were taken during a different install. During your own install, the interfaces will reflect your hardware (rge0 and rtls0 in this article).

Figure 3 Solaris Network Connectivity Screen

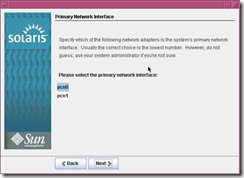

Since multiple network interfaces are present on this machine, a screen will appear asking you to select all of the network interfaces you want to configure.

In this article, we will select both interfaces because we want to configure both. Click [Next] after selecting both network interfaces. In the next screen, we specify which of the network interfaces is the primary interface (shown in Figure 5). For this article, we will be using rge0 as the primary interface.

Figure 5 Selecting the Primary Interface

Specify not to use DHCP as shown in Figure 6 and enter your network information when prompted for it. Since we will be using two network interfaces, we will have to fill out the prompts for both. The interfaces are configured one after the other.

Adjusting Network Settings

The UDP (User Datagram Protocol) settings affect cluster interconnect transmissions. If the buffers set by these parameters are too small, then incoming UDP datagrams can be dropped due to insufficient space, which requires send-side retransmission. This can result in poor cluster performance.

On Solaris, the UDP parameters are udp_recv_hiwat and udp_xmit_hiwat. The default values for these parameters on Solaris 10 are 57344 bytes. Oracle recommends that you set these parameters to at least 65536 bytes.

To see what these parameters are currently set to, enter the following commands:

# ndd /dev/udp udp_xmit_hiwat

57344

# ndd /dev/udp udp_recv_hiwat

57344
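If you want to script this check across both nodes, a small helper can compare each value with the 65536-byte minimum. This is only a sketch: `check_hiwat` is a hypothetical function, and the live values still come from `ndd` as shown above. Run it under ksh or bash on Solaris 10.

```sh
# Oracle's recommended minimum for both UDP buffer parameters (bytes).
ORACLE_MIN_HIWAT=65536

# check_hiwat NAME VALUE
# Hypothetical helper: prints a verdict for one parameter and returns
# non-zero when the value is below the recommended minimum.
check_hiwat() {
    if [ "$2" -ge "$ORACLE_MIN_HIWAT" ]; then
        echo "$1=$2 OK"
    else
        echo "$1=$2 below $ORACLE_MIN_HIWAT"
        return 1
    fi
}

# On a Solaris node, feed it the live values, for example:
#   check_hiwat udp_xmit_hiwat "$(ndd /dev/udp udp_xmit_hiwat)"
#   check_hiwat udp_recv_hiwat "$(ndd /dev/udp udp_recv_hiwat)"
```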

To set the values of these parameters to 65536 bytes in current memory, enter the following commands:

# ndd -set /dev/udp udp_xmit_hiwat 65536

# ndd -set /dev/udp udp_recv_hiwat 65536

Next, these values must be applied every time the system boots. Contrary to the official Oracle documentation, setting these parameters in the /etc/system file has no effect on Solaris 10 (see Bug 5237047 for more information).

Instead, we write a startup script named udp_rac in /etc/init.d with the following contents:

#!/sbin/sh

case "$1" in

'start')

ndd -set /dev/udp udp_xmit_hiwat 65536

ndd -set /dev/udp udp_recv_hiwat 65536

;;

'state')

ndd /dev/udp udp_xmit_hiwat

ndd /dev/udp udp_recv_hiwat

;;

*)

echo "Usage: $0 { start | state }"

exit 1

;;

esac

We now need to create a link to this script in the /etc/rc3.d directory.

# ln -s /etc/init.d/udp_rac /etc/rc3.d/S86udp_rac

Install Openfiler

Perform the following installation on the network storage server (openfiler).

With the network configured on both Oracle RAC nodes, the next step is to install the Openfiler software on the network storage server (openfiler). Later in this article, the network storage server will be configured as an iSCSI storage device for all Oracle RAC 10g shared storage requirements.

Download Openfiler

Download Openfiler 2.1 x86 (Final). After downloading Openfiler, you will need to burn the ISO image to CD.

Install Openfiler

This section provides a summary of the screens used to install the Openfiler software. For the purpose of this article, I opted to install Openfiler with all default options. The only manual change required was for configuring local network settings.

Once the install has completed, the server will reboot to make sure all required components, services and drivers are started and recognized. After the reboot, the internal Maxtor SCSI hard drive that will hold the shared storage should be discovered by the Openfiler server as the device /dev/sdb.

After downloading and burning the Openfiler ISO image to CD, insert the CD into the network storage server (openfiler in this example), power it on, and answer the installation prompts as noted below.

Boot Screen

The first screen is the Openfiler boot screen. At the boot: prompt, hit [Enter] to start the installation process.

Media Test

When asked to test the CD media, tab over to [Skip] and hit [Enter]. If there were any errors, the media burning software would have warned us. After several seconds, the installer should then detect the video card, monitor, and mouse. The installer then goes into GUI mode.

Welcome to Openfiler NAS/SAN Appliance

At the welcome screen, click [Next] to continue.

Keyboard Configuration

The next screen prompts you for the keyboard settings. Make the appropriate selection for your configuration.

Disk Partitioning Setup

The next screen asks whether to perform disk partitioning using "Automatic Partitioning" or "Manual Partitioning with Disk Druid". You can choose either method here, although the official Openfiler documentation suggests using Manual Partitioning. Since the internal hard drive I will be using for this install is small and will only be used to store the Openfiler software, I opted to use "Automatic Partitioning".

Select [Automatically Partition] and click [Next] to continue.

Partitioning

The installer will then allow you to view (and modify if needed) the disk partitions it automatically selected for /dev/hda. In almost all cases, the installer will choose 100MB for /boot, double the amount of RAM for swap, and the rest going to the root (/) partition.
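The automatic layout described above is simple arithmetic, so you can estimate it in advance for your own boot disk. The sketch below assumes exactly the rule stated here: 100 MB for /boot, swap of twice the RAM, and the remainder for /. The real installer rounds to cylinder boundaries, so expect small differences. `auto_layout` is a hypothetical helper.

```sh
# auto_layout DISK_MB RAM_MB
# Hypothetical helper: estimates the Openfiler automatic partitioning
# rule of thumb (100 MB /boot, swap = 2 x RAM, rest to /), in MB.
auto_layout() {
    al_boot=100
    al_swap=$(( $2 * 2 ))
    al_root=$(( $1 - al_boot - al_swap ))
    echo "/boot=${al_boot}MB swap=${al_swap}MB /=${al_root}MB"
}
```

For instance, `auto_layout 8192 1024` estimates the layout for an 8 GB disk in a server with 1 GB of RAM: roughly 100 MB for /boot, 2048 MB for swap and 6044 MB for /.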

Network Configuration

Make sure that each network device is checked to [Active on Boot].

Now, [Edit] eth0 and eth1 as follows. eth1 must be configured to be on the same subnet as the private interconnect of the two RAC nodes.

eth0:

- IP Address: 192.168.3.52

- Netmask: 255.255.252.0

eth1:

- IP Address: 192.168.10.12

- Netmask: 255.255.255.0

Continue by setting your hostname manually. I used a hostname of openfiler.

Time Zone Selection

The next screen allows you to configure your time zone information. Make the appropriate selection for your location.

Set Root Password

Select a root password and click [Next] to continue.

About to Install

This screen is basically a confirmation screen. Click [Next] to start the installation.

Finished

Openfiler is now installed. The installer will eject the CD from the CD-ROM drive. Take out the CD and click [Reboot] to reboot the system.

If everything was successful after the reboot, you should now be presented with a text login screen and the URL to use for administering the Openfiler server.

Configure iSCSI Volumes using Openfiler

Perform the following configuration tasks on the network storage server (openfiler)

Openfiler administration is performed using the Openfiler Storage Control Center, a browser-based tool accessed over HTTPS on port 446. In this article, the Openfiler Storage Control Center is accessed from one of the Oracle RAC nodes. For example:

https://openfiler:446/

From the Openfiler Storage Control Center home page, login as an administrator. The default administration login credentials for Openfiler are:

- Username: openfiler

- Password: password

The first page the administrator sees is the [Accounts]/[Authentication] screen. Configuring user accounts and groups is not necessary for this article and will therefore not be discussed.

To use Openfiler as an iSCSI storage server, we have to perform three major tasks: set up iSCSI services, configure network access, and create physical storage.

Services

To control services, we use the Openfiler Storage Control Center and navigate to [Services] / [Manage Services]:

Figure: Enable iSCSI Openfiler Service

To enable the iSCSI service, click on the 'Enable' link under the 'iSCSI target server' service name. After that, the 'iSCSI target server' status should change to 'Enabled'.

The ietd program implements the user level part of iSCSI Enterprise Target software for building an iSCSI storage system on Linux. With the iSCSI target enabled, we should be able to SSH into the Openfiler server and see the iscsi-target service running:

[root@openfiler1 ~]# service iscsi-target status

ietd (pid 14243) is running...

The next step is to configure network access in Openfiler to identify both Oracle RAC nodes (RAC1 and RAC2) that will need to access the iSCSI volumes through the storage (private) network. Note that iSCSI volumes will be created later on in this section. Also note that this step does not actually grant the appropriate permissions to the iSCSI volumes required by both Oracle RAC nodes. That will be accomplished later in this section by updating the ACL for each new logical volume.

As in the previous section, configuring network access is accomplished using the Openfiler Storage Control Center by navigating to [System] / [Network Setup]. The "Network Access Configuration" section (at the bottom of the page) allows an administrator to set up networks and/or hosts that will be allowed to access resources exported by the Openfiler appliance. For the purpose of this article, we will add both Oracle RAC nodes individually rather than allowing the entire 192.168.10.0 network to have access to Openfiler resources.

When entering each of the Oracle RAC nodes, note that the 'Name' field is just a logical name used for reference only. As a convention when entering nodes, I simply use the node name defined for that IP address. Next, when entering the actual node in the 'Network/Host' field, always use its IP address even though its host name may already be defined in your /etc/hosts file or DNS. Lastly, when entering actual hosts in our Class C network, use a subnet mask of 255.255.255.255.

It is important to remember that you will be entering the IP address of the private network interface (rtls0) for each of the RAC nodes in the cluster: 192.168.10.10 for RAC1 and 192.168.10.11 for RAC2.
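The reason for the 255.255.255.255 mask is that an ACL entry admits 2^(32 - prefix) addresses. A full mask therefore limits the entry to exactly one host, while 255.255.255.0 would open it to the whole 192.168.10.0 network. The hypothetical helpers below make that arithmetic explicit. They are POSIX shell (use ksh or bash on Solaris 10) and assume contiguous masks such as the /22 to /32 masks used in this article.

```sh
# mask_prefix MASK
# Hypothetical helper: counts the 1 bits in a dotted-quad netmask,
# e.g. 255.255.255.0 gives 24. Assumes a contiguous mask.
mask_prefix() {
    (
        IFS=.
        set -- $1
        bits=0
        for octet in "$1" "$2" "$3" "$4"; do
            while [ "$octet" -gt 0 ]; do
                bits=$((bits + (octet & 1)))
                octet=$((octet >> 1))
            done
        done
        echo "$bits"
    )
}

# mask_addresses MASK
# Number of addresses an ACL entry with this mask admits: 2^(32 - prefix).
mask_addresses() {
    echo $((1 << (32 - $(mask_prefix "$1"))))
}
```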

The following image shows the results of adding both Oracle RAC nodes:

Physical Storage

In this section, we will be creating the six iSCSI volumes to be used as shared storage by both of the Oracle RAC nodes in the cluster. This involves multiple steps that will be performed on the internal 73GB 15K SCSI hard disk connected to the Openfiler server.

Storage devices like internal IDE/SATA/SCSI/SAS disks, storage arrays, external USB drives, external FireWire drives, or ANY other storage can be connected to the Openfiler server and served to the clients. Once these devices are discovered at the OS level, Openfiler Storage Control Center can be used to set up and manage all of that storage.

In our case, we have a 73GB internal SCSI hard drive for our shared storage needs. On the Openfiler server this drive is seen as /dev/sdb (MAXTOR ATLAS15K2_73SCA). To see this and to start the process of creating our iSCSI volumes, navigate to [Volumes] / [Block Devices] from the Openfiler Storage Control Center:

Partitioning the Physical Disk

The first step we will perform is to create a single primary partition on the /dev/sdb internal hard disk. By clicking on the /dev/sdb link, we are presented with the options to 'Edit' or 'Create' a partition. Since we will be creating a single primary partition that spans the entire disk, most of the options can be left to their default setting where the only modification would be to change the 'Partition Type' from 'Extended partition' to 'Physical volume'. Here are the values I specified to create the primary partition on /dev/sdb:

- Mode: Primary

- Partition Type: Physical volume

- Starting Cylinder: 1

- Ending Cylinder: 8924

The size now shows 68.36 GB. To accept that we click on the "Create" button. This results in a new partition (/dev/sdb1) on our internal hard disk:
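The 68.36 GB figure can be reproduced from the cylinder range. This assumes the usual translated geometry of 255 heads and 63 sectors per track with 512-byte sectors. That geometry is an assumption: `fdisk -l /dev/sdb` on the Openfiler host shows the actual one. Under it, 8924 cylinders come to 73,402,398,720 bytes, which is 68.36 in binary gigabytes (GiB). `cyl_to_gib` is a hypothetical helper that does the arithmetic in awk, which avoids integer overflow in 32-bit shells.

```sh
# cyl_to_gib CYLINDERS [HEADS] [SECTORS]
# Hypothetical helper: converts a cylinder count to GiB. Defaults to the
# assumed 255-head, 63-sector geometry with 512-byte sectors.
cyl_to_gib() {
    awk -v c="$1" -v h="${2:-255}" -v s="${3:-63}" \
        'BEGIN { printf "%.2f\n", c * h * s * 512 / (1024 * 1024 * 1024) }'
}
```

For example, `cyl_to_gib 8924` prints 68.36.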

Figure 9: Partition the Physical Volume

Volume Group Management

The next step is to create a Volume Group. We will be creating a single volume group named rac1 that contains the newly created primary partition.

From the Openfiler Storage Control Center, navigate to [Volumes] / [Volume Groups]. There we would see any existing volume groups, or none as in our case. Using the Volume Group Management screen, enter the name of the new volume group (rac1), click on the checkbox in front of /dev/sdb1 to select that partition, and finally click on the 'Add volume group' button. After that we are presented with the list that now shows our newly created volume group named "rac1":

Figure: New Volume Group Created

Logical Volumes

We can now create the six logical volumes in the newly created volume group (rac1).

From the Openfiler Storage Control Center, navigate to [Volumes]/[Create New Volume]. There we will see the newly created volume group (rac1) along with its block storage statistics. Also available at the bottom of this screen is the option to create a new volume in the selected volume group. Use this screen to create the following six logical (iSCSI) volumes. After creating each logical volume, the application will point you to the 'List of Existing Volumes' screen. You will then need to click back to the 'Create New Volume' tab to create the next logical volume until all six iSCSI volumes are created:

iSCSI / Logical Volumes

| Volume Name | Volume Description | Required Space (MB) | Filesystem Type |
|---|---|---|---|
| ocr | Oracle Cluster Registry RAW Device | 160 | iSCSI |
| vot | Voting Disk RAW Device | 64 | iSCSI |
| asmspfile | ASM SPFILE RAW Device | 160 | iSCSI |
| asm1 | Oracle ASM Volume 1 | 90,000 | iSCSI |
| asm2 | Oracle ASM Volume 2 | 90,000 | iSCSI |
| asm3 | Oracle ASM Volume 3 | 100,000 | iSCSI |

In effect, we have created six iSCSI disks that can now be presented to iSCSI clients (RAC1 and RAC2) on the network.

iSCSI Targets

At this point we have six iSCSI logical volumes. Before an iSCSI client can access them, however, an iSCSI target must be created for each of these six volumes. Each iSCSI logical volume will be mapped to a specific iSCSI target, and the appropriate network access permissions to that target will be granted to both Oracle RAC nodes. For the purpose of this article, there will be a one-to-one mapping between an iSCSI logical volume and an iSCSI target.

There are three steps involved in creating and configuring an iSCSI target: create a unique Target IQN (basically, the universal name for the new iSCSI target), map one of the iSCSI logical volumes (created in the previous section) to the newly created iSCSI target, and finally, grant both of the Oracle RAC nodes access to the new iSCSI target. Please note that this process will need to be performed for each of the six iSCSI logical volumes created in the previous section.

For the purpose of this article, the following table lists the new iSCSI target names (the Target IQN) and which iSCSI logical volume it will be mapped to:

| Target IQN | iSCSI Volume Name | Volume Description |
|---|---|---|
| | crs | Cluster Registry Service |
| | VOT | Voting Disk |
| | asmspfile | SPFILE for ASM |
| | asm1 | ASM |
| | asm2 | ASM |
| | asm3 | ASM |

We are now ready to create the six new iSCSI targets, one for each of the iSCSI logical volumes. The example below illustrates the three steps required to create a new iSCSI target by creating the Oracle Clusterware / crs target (iqn.2006-01.com.openfiler:crs). This three-step process will need to be repeated for each of the six new iSCSI targets listed in the table above.

Create New Target IQN

From the Openfiler Storage Control Center, navigate to [Volumes] / [iSCSI Targets]. Verify the grey sub-tab "Target Configuration" is selected. This page allows you to create a new iSCSI target. A default value is automatically generated for the name of the new iSCSI target (better known as the "Target IQN"). An example Target IQN is "iqn.2006-01.com.openfiler:tsn.ae4683b67fd3":

Figure: Create New iSCSI Target : Default Target IQN

I prefer to replace the last segment of the default Target IQN with something more meaningful. For the first iSCSI target (Oracle Clusterware / crs), I will modify the default Target IQN by replacing the string "tsn.ae4683b67fd3" with "crs", as shown below:
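Only the part after the final colon changes, so the renaming is easy to express in the shell. The sketch below is hypothetical and only prints the names; you still enter them in the Openfiler web interface. The volume list in `plan_iqns` comes from the target table above, with the voting disk written in lower case. Treat it as an assumption and adjust it to your own naming.

```sh
# rename_iqn DEFAULT_IQN NAME
# Hypothetical helper: keeps everything up to the last ':' of the IQN that
# Openfiler generated and replaces the final segment with NAME.
rename_iqn() {
    echo "${1%:*}:$2"
}

# plan_iqns DEFAULT_IQN
# Prints one target name per logical volume (volume list assumed).
plan_iqns() {
    for vol in crs vot asmspfile asm1 asm2 asm3; do
        rename_iqn "$1" "$vol"
    done
}
```

For example, `rename_iqn iqn.2006-01.com.openfiler:tsn.ae4683b67fd3 crs` prints iqn.2006-01.com.openfiler:crs.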

LUN Mapping

After creating the new iSCSI target, the next step is to map the appropriate iSCSI logical volumes to it. Under the "Target Configuration" sub-tab, verify the correct iSCSI target is selected in the section "Select iSCSI Target". If not, use the pull-down menu to select the correct iSCSI target and hit the "Change" button.

Next, click on the grey sub-tab named "LUN Mapping" (next to the "Target Configuration" sub-tab). Locate the appropriate iSCSI logical volume (/dev/rac1/crs in this case) and click the "Map" button. You do not need to change any settings on this page.

LUN Setting

LUN Mapped to target: iqn.2006-01.com.openfiler:crs

Network ACL

Before an iSCSI client can access the newly created iSCSI target, it needs to be granted the appropriate permissions. Earlier, we configured network access in Openfiler for two hosts (the Oracle RAC nodes). These are the two nodes that will need to access the new iSCSI targets through the storage (private) network. We now need to grant both of the Oracle RAC nodes access to the new iSCSI target.

Click on the grey sub-tab named "Network ACL" (next to "LUN Mapping" sub-tab). For the current iSCSI target, change the "Access" for both hosts from 'Deny' to 'Allow' and click the 'Update' button:

Make iSCSI Targets Available to Clients

Every time a new logical volume is added, we need to restart the associated service on the Openfiler server. In our case, we created iSCSI logical volumes, so we have to restart the iSCSI target (iscsi-target) service. This will make the new iSCSI targets available to all clients on the network who have privileges to access them.

To restart the iSCSI target service, use the Openfiler Storage Control Center and navigate to [Services]/[Enable/Disable]. The iSCSI target service should already be enabled (several sections back). If so, disable the service then enable it again.

The same task can be achieved through an SSH session on the Openfiler server:

openfiler1:~# service iscsi-target restart

Stopping iSCSI target service: [ OK ]

Starting iSCSI target service: [ OK ]

Configure iSCSI Volumes on Oracle RAC Nodes

Configure the iSCSI initiator on all Oracle RAC nodes in the cluster.

iSCSI Terminology

The following terms will be used in this article.

Initiator

The driver that initiates SCSI requests to the iSCSI target.

Target Device

Represents the iSCSI storage component.

Discovery

Discovery is the process that presents the initiator with a list of available targets.

Discovery Method

The discovery method describes the way in which the iSCSI targets can be found.

Currently, three types of discovery methods are available:

- Internet Storage Name Service (iSNS) - Potential targets are discovered by interacting with one or more iSNS servers.

- SendTargets - Potential targets are discovered by using a discovery-address.

- Static - Static target addresses are configured.

On Solaris, the iSCSI configuration information is stored in the /etc/iscsi directory. The information contained in this directory requires no administration.

iSCSI Configuration

In this article, we will be using the SendTargets discovery method. We first need to verify that the iSCSI software packages are installed on our servers before we can proceed further.

# pkginfo SUNWiscsiu SUNWiscsir

system SUNWiscsir Sun iSCSI Device Driver (root)

system SUNWiscsiu Sun iSCSI Management Utilities (usr)

#
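When preparing several nodes, the same check can be scripted. `pkginfo -q` prints nothing and reports through its exit status whether a package is installed. The wrapper around it, `check_iscsi_pkgs`, is a hypothetical helper for this post. Run it under ksh or bash.

```sh
# check_iscsi_pkgs
# Hypothetical helper: confirms that both Solaris iSCSI initiator
# packages are installed, using the exit status of `pkginfo -q`.
check_iscsi_pkgs() {
    for pkg in SUNWiscsiu SUNWiscsir; do
        if ! pkginfo -q "$pkg"; then
            echo "missing $pkg"
            return 1
        fi
    done
    echo "iSCSI packages installed"
}
```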

We will now configure the iSCSI target device to be discovered dynamically like so:

How to Configure iSCSI Target Discovery (perform on both RAC1 and RAC2)

This procedure assumes that you are logged in to the local system where you want to configure access to an iSCSI target device.

1. Become superuser.

2. Configure the target device to be discovered dynamically or statically using one of the following methods:

o Configure the device to be dynamically discovered (SendTargets).

For example:

initiator# iscsiadm add discovery-address 192.168.10.12:3260

o Configure the device to be dynamically discovered (iSNS).

For example:

initiator# iscsiadm add iSNS-server 192.168.10.12:3205

o Configure the device to be statically discovered.

For example:

initiator# iscsiadm add static-config eui.5000ABCD78945E2B,192.168.10.12

3. The iSCSI connection is not initiated until the discovery method is enabled. See the next step.

4. Enable the iSCSI target discovery method using one of the following:

o If you have configured a dynamically discovered (SendTargets) device, enable the SendTargets discovery method:

initiator# iscsiadm modify discovery --sendtargets enable

o If you have configured a dynamically discovered (iSNS) device, enable the iSNS discovery method:

initiator# iscsiadm modify discovery --iSNS enable

o If you have configured static targets, enable the static target discovery method:

initiator# iscsiadm modify discovery --static enable

5. Create the iSCSI device links for the local system:

initiator# devfsadm -i iscsi
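The SendTargets path used in this article comes down to three commands per node. The sketch below wraps them in a function so the same steps can be repeated on RAC1 and RAC2. `configure_sendtargets`, `st_run` and the `DRY_RUN` switch are hypothetical names introduced here; with `DRY_RUN=1`, the commands are only printed. The final `iscsiadm list target` is an optional check that the Openfiler targets are now visible. Run it as root under ksh or bash.

```sh
# st_run CMD [ARGS...]
# Hypothetical helper: runs a command, or only prints it when DRY_RUN=1.
st_run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# configure_sendtargets ADDRESS:PORT
# Hypothetical wrapper for the SendTargets steps above.
configure_sendtargets() {
    [ -n "$1" ] || { echo "usage: configure_sendtargets ADDRESS:PORT" >&2; return 2; }
    st_run iscsiadm add discovery-address "$1" || return 1
    st_run iscsiadm modify discovery --sendtargets enable || return 1
    st_run devfsadm -i iscsi || return 1
    # List the targets the initiator now sees (one per Openfiler volume).
    st_run iscsiadm list target
}
```

For example, `DRY_RUN=1 configure_sendtargets 192.168.10.12:3260` prints the four commands without running them. Drop `DRY_RUN=1` to execute them.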

To verify that the iSCSI devices are available on the node, we will use the format command. If you are following this article, the output of the format command should look like the following:

# format

Searching for disks...done

AVAILABLE DISK SELECTIONS:

0. c0d0 <DEFAULT cyl 7293 alt 2 hd 255 sec 63>

/pci@0,0/pci-ide@1f,2/ide@0/cmdk@0,0

1. c2t1d0 <DEFAULT cyl 157 alt 2 hd 64 sec 32>

/iscsi/disk@0000iqn.2006-01.com.openfiler%3Arac1.ocr0001,0

2. c2t2d0 <DEFAULT cyl 61 alt 2 hd 64 sec 32>

/iscsi/disk@0000iqn.2006-01.com.openfiler%3Arac1.vot0001,0

3. c2t3d0 <DEFAULT cyl 157 alt 2 hd 64 sec 32>

/iscsi/disk@0000iqn.2006-01.com.openfiler%3Arac1.asmspfile0001,0

4. c2t4d0 <DEFAULT cyl 11473 alt 2 hd 255 sec 63>

/iscsi/disk@0000iqn.2006-01.com.openfiler%3Arac1.asm10001,0

5. c2t5d0 <DEFAULT cyl 11473 alt 2 hd 255 sec 63>

/iscsi/disk@0000iqn.2006-01.com.openfiler%3Arac1.asm20001,0

6. c2t6d0 <DEFAULT cyl 12746 alt 2 hd 255 sec 63>

/iscsi/disk@0000iqn.2006-01.com.openfiler%3Arac1.asm30001,0

Specify disk (enter its number):
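The cXtYdZ names are assigned by the initiator, so it is worth confirming which device backs which Openfiler volume before partitioning anything. The awk-based sketch below reads the disk list that format prints and pairs each iSCSI device with the volume name at the end of its device path. `map_iscsi_disks` is a hypothetical helper. It assumes device paths shaped like the ones above, ending in the volume name followed by `0001,<LUN>`. To feed it, you can pipe an empty line into format (e.g. `echo | format | map_iscsi_disks`), a common idiom for listing disks without entering the menu. On Solaris, setting `AWK=nawk` is advisable, since /usr/bin/awk there is the old awk and may lack `sub()`.

```sh
# map_iscsi_disks
# Hypothetical helper: reads format(1M) disk-list output on stdin and
# prints "cXtYdZ volume" for every iSCSI disk.
map_iscsi_disks() {
    ${AWK:-awk} '
        # A numbered disk line such as "1. c2t1d0 <DEFAULT ...>": remember the device
        /^ *[0-9]+\. c[0-9]/ { disk = $2; next }
        # The following device-path line: print the device with its volume name
        /\/iscsi\/disk@/ && disk != "" {
            path = $1
            sub(/.*\./, "", path)          # keep the text after the last "."
            sub(/0001,[0-9]+$/, "", path)  # drop the trailing 0001,<LUN>
            print disk, path
            disk = ""
        }
    '
}
```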

Now, we need to create partitions on the iSCSI volumes, following the procedure outlined in Metalink Note 367715.1. The key point of this note is that when formatting the devices to be used for the OCR and the Voting Disk files, the disk slices must skip the first cylinder (cylinder 0) to avoid overwriting the disk VTOC (Volume Table of Contents). The VTOC is a special area of the disk set aside for storing information about the disk's controller, geometry and slices.

If this is not done, the root.sh script, which is run after the Clusterware installation, will fail because it cannot initialize the OCR and voting disk.
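The slice sizes entered at the "Enter partition size" prompts in the session below follow from this rule. Each slice starts at cylinder 3, leaving cylinders 0 to 2 (and with them the VTOC) untouched, and runs to the last usable cylinder. Its length is therefore the number of available cylinders reported by format minus 3. One cylinder holds heads x sectors x 512 bytes. That is why format reports 1.00MB per cylinder on the 64-head, 32-sector OCR and voting disks, and 7.84MB on the 255-head, 63-sector ASM disks. The hypothetical helpers below just do that arithmetic.

```sh
# slice_cyls AVAILABLE START
# Hypothetical helper: cylinders left for a slice that begins at START and
# ends at the last usable cylinder (usable cylinders are 0 .. AVAILABLE-1).
slice_cyls() {
    echo $(( $1 - $2 ))
}

# cyl_bytes HEADS SECTORS
# Hypothetical helper: bytes in one cylinder, assuming 512-byte sectors.
cyl_bytes() {
    echo $(( $1 * $2 * 512 ))
}
```

For the OCR disk (156 available cylinders), `slice_cyls 156 3` gives 153. For the voting disk (60 available cylinders), `slice_cyls 60 3` gives 57. These match the sizes typed into format.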

Also, if the partition on which the ASM SPFILE is going to be created does not skip the first cylinder to avoid overwriting the VTOC, then the following error message will appear when running DBCA

Below I am running the format command from the RAC1 node only.

# format

Searching for disks...done

AVAILABLE DISK SELECTIONS:

0. c0d0 <DEFAULT cyl 7293 alt 2 hd 255 sec 63>

/pci@0,0/pci-ide@1f,2/ide@0/cmdk@0,0

1. c2t1d0 <DEFAULT cyl 157 alt 2 hd 64 sec 32>

/iscsi/disk@0000iqn.2006-01.com.openfiler%3Arac1.ocr0001,0

2. c2t2d0 <DEFAULT cyl 61 alt 2 hd 64 sec 32>

/iscsi/disk@0000iqn.2006-01.com.openfiler%3Arac1.vot0001,0

3. c2t3d0 <DEFAULT cyl 157 alt 2 hd 64 sec 32>

/iscsi/disk@0000iqn.2006-01.com.openfiler%3Arac1.asmspfile0001,0

4. c2t4d0 <DEFAULT cyl 11473 alt 2 hd 255 sec 63>

/iscsi/disk@0000iqn.2006-01.com.openfiler%3Arac1.asm10001,0

5. c2t5d0 <DEFAULT cyl 11473 alt 2 hd 255 sec 63>

/iscsi/disk@0000iqn.2006-01.com.openfiler%3Arac1.asm20001,0

6. c2t6d0 <DEFAULT cyl 12746 alt 2 hd 255 sec 63>

/iscsi/disk@0000iqn.2006-01.com.openfiler%3Arac1.asm30001,0

Specify disk (enter its number): 1

selecting c2t1d0

[disk formatted]

FORMAT MENU

disk - select a disk

type - select (define) a disk type

partition - select (define) a partition table

current - describe the current disk

format - format and analyze the disk

fdisk - run the fdisk program

repair - repair a defective sector

label - write label to the disk

analyze - surface analysis

defect - defect list management

backup - search for backup labels

verify - read and display labels

save - save new disk/partition definitions

inquiry - show vendor, product and revision

volname - set 8-character volume name

!<cmd> - execute <cmd>, then return

format> partition

Please run fdisk first

format> fdisk

No fdisk table exists. The default partition for the disk is:

a 100% "SOLARIS system" partition

Type "y" to accept the default partition, otherwise type "n" to edit the partition table.

y

format> partition

PARTITION MENU:

0 - change '0' partition

1 - change '1' partition

2 - change '2' partition

3 - change '3' partition

4 - change '4' partition

5 - change '5' partition

6 - change '6' partition

7 - change '7' partition

select - select a predefined table

modify - modify a predefined partition table

name - name the current table

print - display the current table

label - write the partition map and label to the disk

!<cmd> - execute <cmd>, then return

quit

partition> print

Current partition table (original):

Total disk cylinders available: 156 + 2 (reserved cylinders)

Part Tag Flag Cylinders Size Blocks

0 unassigned wm 0 0 (0/0/0) 0

1 unassigned wm 0 0 (0/0/0) 0

2 backup wu 0 - 156 157.00MB (157/0/0) 321536

3 unassigned wm 0 0 (0/0/0) 0

4 unassigned wm 0 0 (0/0/0) 0

5 unassigned wm 0 0 (0/0/0) 0

6 unassigned wm 0 0 (0/0/0) 0

7 unassigned wm 0 0 (0/0/0) 0

8 boot wu 0 - 0 1.00MB (1/0/0) 2048

9 unassigned wm 0 0 (0/0/0) 0

partition> 1

Part Tag Flag Cylinders Size Blocks

1 unassigned wm 0 0 (0/0/0) 0

Enter partition id tag[unassigned]:

Enter partition permission flags[wm]:

Enter new starting cyl[0]: 3

Enter partition size[0b, 0c, 3e, 0.00mb, 0.00gb]: 153c

partition> label

Ready to label disk, continue? y

partition> quit

format> disk 2

selecting c2t2d0

[disk formatted]

format> fdisk

No fdisk table exists. The default partition for the disk is:

a 100% "SOLARIS System" partition

Type "y" to accept the default partition, otherwise type "n" to edit the

partition table.

y

format> partition

partition> print

Current partition table (original):

Total disk cylinders available: 60 + 2 (reserved cylinders)

Part Tag Flag Cylinders Size Blocks

0 unassigned wm 0 0 (0/0/0) 0

1 unassigned wm 0 0 (0/0/0) 0

2 backup wu 0 - 60 61.00MB (61/0/0) 124928

3 unassigned wm 0 0 (0/0/0) 0

4 unassigned wm 0 0 (0/0/0) 0

5 unassigned wm 0 0 (0/0/0) 0

6 unassigned wm 0 0 (0/0/0) 0

7 unassigned wm 0 0 (0/0/0) 0

8 boot wu 0 - 0 1.00MB (1/0/0) 2048

9 unassigned wm 0 0 (0/0/0) 0

partition> 1

Part Tag Flag Cylinders Size Blocks

1 unassigned wm 0 0 (0/0/0) 0

Enter partition id tag[unassigned]:

Enter partition permission flags[wm]:

Enter new starting cyl[0]: 3

Enter partition size[0b, 0c, 3e, 0.00mb, 0.00gb]: 57c

partition> label

Ready to label disk, continue? y

partition> quit

format> disk 3

selecting c2t3d0

[disk formatted]

format> fdisk

No fdisk table exists. The default partition for the disk is:

a 100% "SOLARIS System" partition

Type "y" to accept the default partition, otherwise type "n" to edit the

partition table.

y

format> partition

partition> print

Current partition table (original):

Total disk cylinders available: 156 + 2 (reserved cylinders)

Part Tag Flag Cylinders Size Blocks

0 unassigned wm 0 0 (0/0/0) 0

1 unassigned wm 0 0 (0/0/0) 0

2 backup wu 0 - 156 157.00MB (157/0/0) 321536

3 unassigned wm 0 0 (0/0/0) 0

4 unassigned wm 0 0 (0/0/0) 0

5 unassigned wm 0 0 (0/0/0) 0

6 unassigned wm 0 0 (0/0/0) 0

7 unassigned wm 0 0 (0/0/0) 0

8 boot wu 0 - 0 1.00MB (1/0/0) 2048

9 unassigned wm 0 0 (0/0/0) 0

partition> 1

Part Tag Flag Cylinders Size Blocks

1 unassigned wm 0 0 (0/0/0) 0

Enter partition id tag[unassigned]:

Enter partition permission flags[wm]:

Enter new starting cyl[0]: 3

Enter partition size[0b, 0c, 3e, 0.00mb, 0.00gb]: 153c

partition> label

Ready to label disk, continue? y

partition> quit

format> disk 4

selecting c2t4d0

[disk formatted]

format> fdisk

No fdisk table exists. The default partition for the disk is:

a 100% "SOLARIS System" partition

Type "y" to accept the default partition, otherwise type "n" to edit the

partition table.

y

format> partition

partition> print

Current partition table (original):

Total disk cylinders available: 11472 + 2 (reserved cylinders)

Part Tag Flag Cylinders Size Blocks

0 unassigned wm 0 0 (0/0/0) 0

1 unassigned wm 0 0 (0/0/0) 0

2 backup wu 0 - 11472 87.89GB (11473/0/0) 184313745

3 unassigned wm 0 0 (0/0/0) 0

4 unassigned wm 0 0 (0/0/0) 0

5 unassigned wm 0 0 (0/0/0) 0

6 unassigned wm 0 0 (0/0/0) 0

7 unassigned wm 0 0 (0/0/0) 0

8 boot wu 0 - 0 7.84MB (1/0/0) 16065

9 unassigned wm 0 0 (0/0/0) 0

partition> 1

Part Tag Flag Cylinders Size Blocks

1 unassigned wm 0 0 (0/0/0) 0

Enter partition id tag[unassigned]:

Enter partition permission flags[wm]:

Enter new starting cyl[0]: 3

Enter partition size[0b, 0c, 3e, 0.00mb, 0.00gb]: 11468c

partition> label

Ready to label disk, continue? y

partition> quit

format> disk 5

selecting c2t5d0

[disk formatted]

format> fdisk

No fdisk table exists. The default partition for the disk is:

a 100% "SOLARIS System" partition

Type "y" to accept the default partition, otherwise type "n" to edit the

partition table.

y

format> partition

partition> print

Current partition table (original):

Total disk cylinders available: 11472 + 2 (reserved cylinders)

Part Tag Flag Cylinders Size Blocks

0 unassigned wm 0 0 (0/0/0) 0

1 unassigned wm 0 0 (0/0/0) 0

2 backup wu 0 - 11472 87.89GB (11473/0/0) 184313745

3 unassigned wm 0 0 (0/0/0) 0

4 unassigned wm 0 0 (0/0/0) 0

5 unassigned wm 0 0 (0/0/0) 0

6 unassigned wm 0 0 (0/0/0) 0

7 unassigned wm 0 0 (0/0/0) 0

8 boot wu 0 - 0 7.84MB (1/0/0) 16065

9 unassigned wm 0 0 (0/0/0) 0

partition> 1

Part Tag Flag Cylinders Size Blocks

1 unassigned wm 0 0 (0/0/0) 0

Enter partition id tag[unassigned]: 3

`3' not expected.

Enter partition id tag[unassigned]:

Enter partition permission flags[wm]:

Enter new starting cyl[0]: 3

Enter partition size[0b, 0c, 3e, 0.00mb, 0.00gb]: 11468c

partition> label

Ready to label disk, continue? y

partition> quit

format> disk 6

selecting c2t6d0

[disk formatted]

format> fdisk

No fdisk table exists. The default partition for the disk is:

a 100% "SOLARIS System" partition

Type "y" to accept the default partition, otherwise type "n" to edit the

partition table.

y

format> partition

partition> print

Current partition table (original):

Total disk cylinders available: 12745 + 2 (reserved cylinders)

Part Tag Flag Cylinders Size Blocks

0 unassigned wm 0 0 (0/0/0) 0

1 unassigned wm 0 0 (0/0/0) 0

2 backup wu 0 - 12745 97.64GB (12746/0/0) 204764490

3 unassigned wm 0 0 (0/0/0) 0

4 unassigned wm 0 0 (0/0/0) 0

5 unassigned wm 0 0 (0/0/0) 0

6 unassigned wm 0 0 (0/0/0) 0

7 unassigned wm 0 0 (0/0/0) 0

8 boot wu 0 - 0 7.84MB (1/0/0) 16065

9 unassigned wm 0 0 (0/0/0) 0

partition> 1

Part Tag Flag Cylinders Size Blocks

1 unassigned wm 0 0 (0/0/0) 0

Enter partition id tag[unassigned]:

Enter partition permission flags[wm]:

Enter new starting cyl[0]: 3

Enter partition size[0b, 0c, 3e, 0.00mb, 0.00gb]: 12740c

partition> label

Ready to label disk, continue? y

partition> quit

format> quit
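The slice sizes above are entered in cylinders (the `c` suffix). How large a cylinder is depends on the geometry in each `print` listing. The small LUNs report 2048 blocks per cylinder (1.00MB). The large ones report 16065 blocks per cylinder (7.84MB). The helpers below are a minimal sketch, with hypothetical names and assuming 512-byte blocks. They convert a cylinder count into megabytes and check that a slice starting at cylinder 3 still fits on the disk, so you can sanity-check a size before typing it.

```shell
# slice_mb: hypothetical helper, not part of Solaris.
# Converts a slice size in cylinders into whole megabytes (rounded down).
#   $1 = number of cylinders (the value typed with the "c" suffix)
#   $2 = blocks per cylinder, from the format "print" output
#        (e.g. 321536 blocks / 157 cylinders = 2048)
# Assumes 512-byte blocks, so 2048 blocks = 1 MB.
slice_mb() {
  local cylinders=$1 blocks_per_cyl=$2
  echo $(( cylinders * blocks_per_cyl / 2048 ))
}

# fits_on_disk: hypothetical helper that checks the last cylinder of the slice
# (start + size - 1) does not run past the last cylinder of the disk.
#   $1 = starting cylinder, $2 = size in cylinders, $3 = last cylinder (e.g. 156)
fits_on_disk() {
  local start=$1 size=$2 last=$3
  [ $(( start + size - 1 )) -le "$last" ]
}
```

For example, `slice_mb 11468 16065` reports 89957 MB (about 87.8 GB) for the slices on c2t4d0 and c2t5d0. Once a disk has been labelled, `prtvtoc /dev/rdsk/c2t4d0s2` prints the resulting VTOC, so you can review it.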

Create oracle User and Directories

Perform the following tasks on all Oracle nodes in the cluster.

We will create the dba group and the oracle user account along with all appropriate directories.

# mkdir -p /u01/app

# groupadd -g 115 dba

# useradd -u 175 -g 115 -d /u01/app/oracle -m -s /usr/bin/bash -c "Oracle Software Owner" oracle

# chown -R oracle:dba /u01

# passwd oracle

# su - oracle

When setting the oracle environment variables on each Oracle RAC node, make sure to assign each node a unique Oracle SID. For this example, we used:

- RAC1: ORACLE_SID=orcl1

- RAC2: ORACLE_SID=orcl2

After creating the oracle user account on both nodes, make sure the environment is set up correctly by using the following .bash_profile. (Note that .bash_profile does not exist by default on Solaris; you will have to create it.)

# .bash_profile

umask 022

export ORACLE_BASE=/export/home/oracle

export ORACLE_HOME=$ORACLE_BASE/product/10.2.0/db_1

export ORA_CRS_HOME=$ORACLE_BASE/product/crs

# Each RAC node must have a unique ORACLE_SID (e.g. orcl1, orcl2, ...)

export ORACLE_SID=orcl1

export PATH=.:${PATH}:$HOME/bin:$ORACLE_HOME/bin

export PATH=${PATH}:/usr/bin:/bin:/usr/local/bin:/usr/sfw/bin

export TNS_ADMIN=$ORACLE_HOME/network/admin

export ORA_NLS10=$ORACLE_HOME/nls/data

export LD_LIBRARY_PATH=$ORACLE_HOME/lib

export LD_LIBRARY_PATH=${LD_LIBRARY_PATH}:$ORACLE_HOME/oracm/lib

export CLASSPATH=$ORACLE_HOME/JRE

export CLASSPATH=${CLASSPATH}:$ORACLE_HOME/jlib

export CLASSPATH=${CLASSPATH}:$ORACLE_HOME/rdbms/jlib

export CLASSPATH=${CLASSPATH}:$ORACLE_HOME/network/jlib

export TEMP=/tmp

export TMPDIR=/tmp
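The only line in this profile that differs between the two nodes is `ORACLE_SID`. One option is to derive the SID from the host name, so the same `.bash_profile` can be copied to both nodes unchanged. This is a sketch assuming the host names are exactly `rac1` and `rac2`, as in the server table. `sid_for_host` is a hypothetical helper name.

```shell
# sid_for_host: hypothetical helper (not part of Oracle) that maps a node's
# short host name to its instance name, using rac1/rac2 and orcl1/orcl2
# from the tables at the top of this article.
sid_for_host() {
  case "$1" in
    rac1) echo orcl1 ;;
    rac2) echo orcl2 ;;
    *)    return 1 ;;    # unknown host: print nothing and fail
  esac
}

# Replacement for the hard-coded "export ORACLE_SID=orcl1" line:
node=$(hostname)
node=${node%%.*}         # strip any domain part, e.g. rac1.example.com -> rac1
if sid=$(sid_for_host "$node"); then
  export ORACLE_SID=$sid
else
  echo "ORACLE_SID not set: unknown host $node" >&2
fi
unset node sid
```

If you later add a third node, you only need to extend the `case` statement.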

Configure the Solaris Servers for Oracle

Perform the following configuration procedure on both Oracle RAC nodes in the cluster.

This section focuses on configuring both Oracle RAC Solaris servers - getting each one prepared for the Oracle RAC 10g installation.

Setting Device Permissions

The devices we will be using for the various components of this article (e.g. the OCR and the voting disk) must have the appropriate ownership and permissions set on them before we can proceed to the installation stage. We will set the permissions and ownerships using the chown and chmod commands as follows (this must be done as the root user):

# chown root:dba /dev/rdsk/c2t1d0s1

# chmod 660 /dev/rdsk/c2t1d0s1

# chown oracle:dba /dev/rdsk/c2t2d0s1

# chmod 660 /dev/rdsk/c2t2d0s1

# chown oracle:dba /dev/rdsk/c2t3d0s1

# chown oracle:dba /dev/rdsk/c2t4d0s1

# chown oracle:dba /dev/rdsk/c2t5d0s1

# chown oracle:dba /dev/rdsk/c2t6d0s1

These permissions will persist across reboots, so no further permission configuration is needed.
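To confirm the result on each node, you can compare the owner, group and mode reported by `ls -lL`. The `-L` option reports on the device node that the `/dev/rdsk` link points to, rather than on the link itself. The function below is a hypothetical sketch, not an Oracle or Solaris tool. Expected values are passed in, with the permission bits written the way `ls` shows them (for example `rw-rw----` for mode 660). Run it with bash.

```shell
# dev_perms_ok: hypothetical check.
#   $1 = device path, $2 = expected owner, $3 = expected group,
#   $4 = expected permission bits as ls prints them, e.g. rw-rw---- for 660
# Returns 0 when owner, group and bits all match, non-zero otherwise.
dev_perms_ok() {
  local want_owner=$2 want_group=$3 want_bits=$4 line bits
  # -L follows the /dev/rdsk symlink to the real node under /devices
  line=$(ls -lL "$1" 2>/dev/null) || return 1
  set -- $line                 # $1 = mode string, $3 = owner, $4 = group
  bits=${1#?}                  # drop the file-type character (c for raw devices)
  [ "$3" = "$want_owner" ] || return 1
  [ "$4" = "$want_group" ] || return 1
  case $bits in
    "$want_bits"|"$want_bits"?) return 0 ;;  # allow a trailing ACL/context marker
  esac
  return 1
}
```

For example, `dev_perms_ok /dev/rdsk/c2t2d0s1 oracle dba rw-rw---- || echo "check c2t2d0s1"` prints a warning only if that slice does not match the chown/chmod commands above.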

Creating Symbolic Links for the SSH Binaries

The Oracle Universal Installer and configuration assistants (such as NETCA) look for the SSH binaries in the wrong location on Solaris. Even if the SSH binaries are in your PATH when you start these programs, they still look for them in the wrong location. On Solaris, the SSH binaries are located in the /usr/bin directory by default, and the Oracle Universal Installer throws an error stating that it cannot find the ssh or scp binaries. For this article, my workaround was simply to create a symbolic link for each of these binaries in the /usr/local/bin directory. This workaround was quick and easy to implement and worked perfectly.

# ln -s /usr/bin/ssh /usr/local/bin/ssh

# ln -s /usr/bin/scp /usr/local/bin/scp
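`ln -s` fails if the link already exists, and /usr/local/bin may not exist on a fresh Solaris 10 install. The hypothetical helper below is a small sketch that makes the step safe to re-run on both nodes. It creates the parent directory if needed and leaves an existing file or link untouched.

```shell
# link_if_missing: hypothetical helper that creates a symbolic link only if
# nothing exists at the link path yet.
#   $1 = existing binary (e.g. /usr/bin/ssh)
#   $2 = link to create   (e.g. /usr/local/bin/ssh)
link_if_missing() {
  local target=$1 link=$2
  if [ -e "$link" ] || [ -L "$link" ]; then
    echo "exists: $link"
    return 0
  fi
  mkdir -p "$(dirname "$link")" && ln -s "$target" "$link"
}
```

As root, `for b in ssh scp; do link_if_missing /usr/bin/$b /usr/local/bin/$b; done` performs the same two links as the commands above.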

Remove any STTY Commands

During an Oracle Clusterware installation, the Oracle Universal Installer uses SSH to perform remote operations on other nodes. Hidden files on the system (for example, .bashrc or .cshrc) will cause installation errors if they contain stty commands.

To avoid this problem, you must modify these files to suppress all output on STDERR, as in the following examples:

- Bourne, bash, or Korn shell:

if [ -t 0 ]; then

stty intr ^C

fi

- C shell:

test -t 0

if ($status == 0) then

stty intr ^C

endif
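To find the lines that need this treatment, you can search the oracle user's login scripts for `stty`. The helper below is a hypothetical sketch. It only lists candidates with file name and line number, and it does not check whether a line is already guarded by a terminal test. Files that do not exist are skipped.

```shell
# find_stty: hypothetical helper that prints file:line:text for every line
# mentioning stty in the given login scripts, for manual review.
find_stty() {
  local f
  for f in "$@"; do
    # /dev/null forces grep to prefix the file name even for a single file
    [ -f "$f" ] && grep -n 'stty' "$f" /dev/null
  done
  return 0
}
```

As the oracle user on each node: `find_stty ~/.profile ~/.bashrc ~/.bash_profile ~/.cshrc ~/.login`.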

Setting Kernel Parameters

In Solaris 10, there is a new way of setting kernel parameters. The Solaris 8 and 9 method of editing the /etc/system file is deprecated. Solaris 10 instead uses the resource control facility, and changes made this way do not require a reboot to take effect.
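This section does not show the commands themselves. As an illustration only, the Solaris 10 replacement for the old shared memory maximum in /etc/system is the `project.max-shm-memory` resource control. You set it on a project with `projadd`/`projmod` and inspect it with `prctl`. The helper below is a hypothetical sketch that prints the commands rather than running them, so they can be reviewed first. The project name `user.oracle` and the 4G default are assumptions to adjust for your own memory size.

```shell
# oracle_project_cmds: hypothetical helper that prints (does not run) the
# resource-control commands for the oracle user.
#   $1 = shared memory limit with a unit, e.g. 4G (defaults to 4G)
oracle_project_cmds() {
  local size=${1:-4G}
  # Create a project for the oracle user; when it exists and no other project
  # is assigned, Solaris uses user.<name> as that user's default project
  echo "projadd -U oracle user.oracle"
  # Set the shared memory ceiling for processes in the project
  echo "projmod -sK \"project.max-shm-memory=(privileged,${size},deny)\" user.oracle"
  # Show the value in effect (needs a running process in the project)
  echo "prctl -n project.max-shm-memory -i project user.oracle"
}
```

Review the output of `oracle_project_cmds 4G`, then run the first two lines as root. The new limit applies to processes started in the project afterwards, such as the oracle user's next login session.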

Configure RAC Nodes for SSH Equivalence

Perform the following configuration procedures on both Oracle RAC nodes in the cluster.

Before you can install and use Oracle RAC, you must configure either secure shell (SSH) or remote shell (RSH) for the oracle user account on both of the Oracle RAC nodes in the cluster. The goal here is to set up user equivalence for the oracle user account. User equivalence enables the oracle user account to access all other nodes in the cluster without the need for a password. This can be configured using either SSH or RSH; SSH is the preferred method.

In this article, we will just discuss the secure shell method for establishing user equivalency. For steps on how to use the remote shell method, please see this section of Jeffrey Hunter's original article.

Creating RSA Keys on Both Oracle RAC Nodes

The first step in configuring SSH is to create RSA key pairs on both Oracle RAC nodes in the cluster. The command to do this will create a public and private key for the RSA algorithm. The content of the RSA public key will then need to be copied into an authorized key file which is then distributed to both of the Oracle RAC nodes in the cluster.

Use the following steps to create the RSA key pair. Please note that these steps will need to be completed on both Oracle RAC nodes in the cluster:

- Log on as the oracle user account:

# su - oracle

- If necessary, create the .ssh directory in the oracle user's home directory and set the correct permissions on it:

$ mkdir -p ~/.ssh

$ chmod 700 ~/.ssh

- Enter the following command to generate an RSA key pair (public and private key) for version 2 of the SSH protocol:

$ /usr/bin/ssh-keygen -t rsa

At the prompts:

- Accept the default location for the key files.

- Enter and confirm a pass phrase. If you set one, it should be different from the oracle user account password; however, a pass phrase is not required, and you can leave it empty.

This command will write the public key to the ~/.ssh/id_rsa.pub file and the private key to the ~/.ssh/id_rsa file. Note that you should never distribute the private key to anyone.

- Repeat the above steps for each Oracle RAC node in the cluster.

Now that both Oracle RAC nodes contain a public and private key pair for RSA, you will need to create an authorized key file on one of the nodes. An authorized key file is nothing more than a single file that contains a copy of everyone's (every node's) RSA public key. Once the authorized key file contains all of the public keys, it is then distributed to all other nodes in the RAC cluster.

Complete the following steps on one of the nodes in the cluster to create and then distribute the authorized key file. For the purpose of this article, I am using rac1.

- First, determine if an authorized key file already exists on the node (~/.ssh/authorized_keys). In most cases this will not exist since this article assumes you are working with a new install. If the file doesn't exist, create it now:

$ touch ~/.ssh/authorized_keys

$ cd ~/.ssh

- In this step, use SSH to copy the content of the ~/.ssh/id_rsa.pub public key from each Oracle RAC node in the cluster to the authorized key file just created (~/.ssh/authorized_keys). Again, this will be done from rac1. You will be prompted for the oracle user account password for both Oracle RAC nodes accessed. Notice that when using SSH to access the node you are on (rac1), the first time it prompts for the oracle user account password. The second attempt at accessing this node will prompt for the pass phrase used to unlock the private key. For any of the remaining nodes, it will always ask for the oracle user account password.

The following example is being run from rac1 and assumes a 2-node cluster, with nodes rac1 and rac2:

$ ssh rac1 cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

The authenticity of host 'rac1 (192.168.3.50)' can't be established.

RSA key fingerprint is a5:de:ee:2a:d8:10:98:d7:ce:ec:d2:f9:2c:64:2e:e5

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'rac1,192.168.3.50' (RSA) to the list of known hosts.

oracle@rac1's password:

$ ssh rac2 cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

The authenticity of host 'rac2 (192.168.3.51)' can't be established.

RSA key fingerprint is d2:99:ed:a2:7b:10:6f:3e:e1:da:4a:45:d5:34:33:5b

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'rac2,192.168.3.51' (RSA) to the list of known hosts.

oracle@rac2's password:

Note: The first time you use SSH to connect to a node from a particular system, you may see a message similar to the following:

The authenticity of host 'rac1 (192.168.3.50)' can't be established.

RSA key fingerprint is a5:de:ee:2a:d8:10:98:d7:ce:ec:d2:f9:2c:64:2e:e5

Are you sure you want to continue connecting (yes/no)? yes

Enter yes at the prompt to continue. You should not see this message again when you connect from this system to the same node.

- At this point, we have the content of the RSA public key from every node in the cluster in the authorized key file (~/.ssh/authorized_keys) on rac1. We now need to copy it to the remaining nodes in the cluster. In our two-node cluster example, the only remaining node is rac2. Use the scp command to copy the authorized key file to all remaining nodes in the cluster:

$ scp ~/.ssh/authorized_keys rac2:.ssh/authorized_keys

oracle@rac2's password:

authorized_keys 100% 1534 1.2KB/s 00:00

- Change the permission of the authorized key file on both Oracle RAC nodes in the cluster by logging into each node and running the following:

$ chmod 600 ~/.ssh/authorized_keys

- At this point, if you use ssh to log in or run a command on another node, you are prompted for the pass phrase that you specified when you created the RSA key. For example, test the following from rac1:

$ ssh rac1 hostname

Enter passphrase for key '/export/home/oracle/.ssh/id_rsa':

rac1

$ ssh rac2 hostname

Enter passphrase for key '/export/home/oracle/.ssh/id_rsa':

rac2

Note: If you see any messages or text other than the host name, the Oracle installation can fail. Make any changes required to ensure that only the host name is displayed when you enter these commands. Any part of a login script that generates output or asks questions should be modified so that it acts only when the shell is interactive.
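The manual steps above append each key with `>>`, so running them a second time leaves duplicate lines in authorized_keys. The helper below is a hypothetical sketch. It merges public-key files that have already been collected on the local node (for example with scp), drops exact duplicates, and applies the 700/600 permissions used in the steps above.

```shell
# build_authorized_keys: hypothetical helper.
#   $1 = the .ssh directory, remaining arguments = id_rsa.pub files to merge
build_authorized_keys() {
  local sshdir=$1 key
  shift
  for key in "$@"; do
    [ -r "$key" ] || { echo "cannot read $key" >&2; return 1; }
  done
  mkdir -p "$sshdir" && chmod 700 "$sshdir" || return 1
  touch "$sshdir/authorized_keys" || return 1
  # sort -u removes duplicate keys; the order of entries does not matter to sshd
  cat "$sshdir/authorized_keys" "$@" | sort -u > "$sshdir/authorized_keys.new" &&
    mv "$sshdir/authorized_keys.new" "$sshdir/authorized_keys" &&
    chmod 600 "$sshdir/authorized_keys"
}
```

For example, with rac2's key copied to a hypothetical /tmp/rac2_id_rsa.pub, run `build_authorized_keys ~/.ssh ~/.ssh/id_rsa.pub /tmp/rac2_id_rsa.pub` on rac1, then copy the result to rac2 with scp as shown above.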

Enabling SSH User Equivalency for the Current Shell Session

When the OUI runs, it needs to run the secure shell tool commands (ssh and scp) without being prompted for a password. Even though SSH is configured on both Oracle RAC nodes in the cluster, the secure shell tool commands will still prompt for a password or pass phrase. Before running the OUI, you need to enable user equivalence for the terminal session you plan to run the OUI from. For the purpose of this article, all Oracle installations will be performed from rac1.

User equivalence will need to be enabled on any new terminal shell session before attempting to run the OUI. If you log out and log back in to the node you will be performing the Oracle installation from, you must enable user equivalence for the terminal session as this is not done by default.

To enable user equivalence for the current terminal shell session, perform the following steps:

- Log on to the node you want to run the OUI from (rac1) as the oracle user:

# su - oracle

- Enter the following commands:

$ exec ssh-agent $SHELL

$ ssh-add

Enter passphrase for /export/home/oracle/.ssh/id_rsa:

Identity added: /export/home/oracle/.ssh/id_rsa (/export/home/oracle/.ssh/id_rsa)

At the prompts, enter the pass phrase for each key that you generated.

- If SSH is configured correctly, you will be able to use the ssh and scp commands from this terminal session without being prompted for a password or pass phrase:

$ ssh rac1 "date;hostname"

Wed Sep 2 17:12 CST 2009

rac1

$ ssh rac2 "date;hostname"

Wed Sep 2 17:12:55 CST 2009

rac2

Note: The commands above should display the date set on both Oracle RAC nodes along with each node's host name. If any of the nodes prompts for a password or pass phrase, verify that the ~/.ssh/authorized_keys file on that node contains the correct public keys. Also, if you see any messages or text other than the date and host name, the Oracle installation can fail. Make any changes required to ensure that only the date and host name are displayed when you enter these commands, and make sure any part of a login script that generates output or asks questions acts only when the shell is interactive.

- The Oracle Universal Installer is a GUI and requires an X server. From the terminal session enabled for user equivalence (the node you will be performing the Oracle installations from), set the environment variable DISPLAY to a valid X Windows display:

Bourne, Korn, and Bash shells:

$ DISPLAY=<Any X-Windows Host>:0

$ export DISPLAY

C shell:

$ setenv DISPLAY <Any X-Windows Host>:0

- You must run the Oracle Universal Installer from this terminal session, or remember to repeat the steps to enable user equivalence (steps 2, 3, and 4 from this section) before you start the OUI from a different terminal session.
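Before starting the OUI, you can automate the "host name only, no prompts" check described above. The helper below is a hypothetical sketch. It uses the ssh option `BatchMode=yes`, which makes ssh fail instead of asking for a password or pass phrase, and it reports any node whose output is not exactly its own name. It assumes each node's `hostname` output matches the name you pass to ssh, as with rac1/rac2 above. `SSH_CMD` is an assumption added so the check can be exercised without a cluster; it defaults to `ssh`.

```shell
# check_equivalence: hypothetical pre-flight check.
#   arguments = node names, e.g. rac1 rac2
# Prints ok/FAILED per node; returns non-zero if any node fails.
check_equivalence() {
  local node out rc=0
  for node in "$@"; do
    out=$(${SSH_CMD:-ssh} -o BatchMode=yes "$node" hostname 2>&1)
    if [ "$out" = "$node" ]; then
      echo "ok: $node"
    else
      echo "FAILED: $node returned: $out"
      rc=1
    fi
  done
  return $rc
}
```

Run `check_equivalence rac1 rac2` in the terminal session where you ran `ssh-add`. Any FAILED line points to a missing key or a login script that still writes output.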

Download Oracle RAC 10g Software

The following download procedures only need to be performed on one node in the cluster.

The next logical step is to install Oracle Clusterware Release 2 (10.2.0.2.0) and Oracle Database 10g Release 2 (10.2.0.2.0). For this article, I chose not to install the Companion CD as Jeffrey Hunter did in his article. This is a matter of choice - if you want to install the companion CD, by all means do.

In this section, we will be downloading and extracting the required software from Oracle to only one of the Solaris nodes in the RAC cluster, namely rac1. This is the machine where I will be performing all of the Oracle installs from.

Log in to the node that you will be performing all of the Oracle installations from (rac1) as the oracle user account. In this example, I will be downloading the required Oracle software to rac1 and saving it to /export/home/oracle/orainstall.

Oracle Clusterware Release 2 (10.2.0.2.0) for Solaris 10 x86

First, download the Oracle Clusterware Release 2 software for Solaris 10 x86.

Oracle Clusterware Release 2 (10.2.0.2.0)

Oracle Database 10g Release 2 (10.2.0.2.0) for Solaris 10 x86

Next, download the Oracle Database 10g Release 2 software for Solaris 10 x86.

Oracle Database 10g Release 2 (10.2.0.2.0)

As the oracle user account, extract the two packages you downloaded to a temporary directory. In this example, I will use /u01/app/oracle/orainstall.

Extract the Clusterware package as follows:

# su - oracle

$ cd ~oracle/orainstall

$ unzip 10202_clusterware_solx86.zip

Then extract the Oracle10g Database software:

$ cd ~oracle/orainstall

$ unzip 10202_database_solx86.zip

Pre-Installation Tasks for Oracle RAC 10g

Perform the following checks on all Oracle RAC nodes in the cluster

The following packages must be installed on each server before you can continue. (If any of the packages are missing, they can be found on the Solaris installation media.)

SUNWlibms

SUNWtoo

SUNWi1cs

SUNWi15cs

SUNWxwfnt

SUNWxwplt

SUNWmfrun

SUNWxwplr

SUNWxwdv

SUNWgcc

SUNWbtool

SUNWi1of

SUNWhea

SUNWlibm

SUNWsprot

SUNWuiu8

To check whether any of these required packages are installed on your system, use the pkginfo -i package_name command as follows:

# pkginfo -i SUNWlibms

system SUNWlibms Math & Microtasking Libraries (Usr)

#

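If you need to install any of the above packages, use the pkgadd -d package_directory package_name command, where package_directory is the location of the packages on the Solaris media (for example, the Solaris_10/Product directory).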
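Checking sixteen packages one at a time is tedious. The helper below is a hypothetical sketch that prints only the packages that are missing. It relies on `pkginfo -q`, which prints nothing and reports the result through its exit status. The package list is copied from this section. `PKGINFO_CMD` is an assumption added for testing and defaults to `pkginfo`.

```shell
# Package list copied from the section above.
REQUIRED_PKGS="SUNWlibms SUNWtoo SUNWi1cs SUNWi15cs SUNWxwfnt SUNWxwplt
SUNWmfrun SUNWxwplr SUNWxwdv SUNWgcc SUNWbtool SUNWi1of SUNWhea SUNWlibm
SUNWsprot SUNWuiu8"

# missing_pkgs: hypothetical helper that prints each named package that
# pkginfo does not report as installed.
missing_pkgs() {
  local p
  for p in "$@"; do
    ${PKGINFO_CMD:-pkginfo} -q "$p" || echo "$p"
  done
}
```

On each node, `missing_pkgs $REQUIRED_PKGS` (unquoted, so the list splits into names) prints nothing when every package is present. Otherwise, it prints the names to pass to pkgadd.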

Install Oracle 10g Clusterware Software

Perform the following installation procedures from only one of the Oracle RAC nodes in the cluster (rac1). The OUI will install the Oracle Clusterware software to both of the Oracle RAC nodes in the cluster.

You are now ready to install the "cluster" part of the environment: the Oracle Clusterware. In a previous section, you downloaded and extracted the install files for Oracle Clusterware to rac1 in the directory /export/home/oracle/orainstall/clusterware. This is the only node from which you need to perform the install.

During the installation of Oracle Clusterware, you will be asked which nodes to include and configure in the RAC cluster. Once the actual installation starts, it will copy the required software to all nodes using the remote access we configured in the section "Configure RAC Nodes for SSH Equivalence".

After installing Oracle Clusterware, the Oracle Universal Installer (OUI) used to install the Oracle10g database software (next section) will automatically recognize these nodes. As with the Oracle Clusterware install in this section, the Oracle Database 10g installer only needs to be run from one node. The OUI will copy the software packages to all nodes configured in the RAC cluster.

Oracle Clusterware - Some Background

libskgxn.so: This library contains the routines used by the Oracle server to maintain node membership services. In short, these routines let Oracle know what nodes are in the cluster. Likewise, if Oracle wants to evict (fence) a node, it will call a routine in this library.

libskgxp.so: This library contains the Oracle server routines used for communication between instances (e.g. Cache Fusion CR sends, lock converts, etc.).

Verifying Terminal Shell Environment

Before starting the OUI, first verify that you are logged on to the server you will be running the installer from (i.e. rac1). Then run the xhost command as root from the console to allow X server connections. Next, log in as the oracle user account. If you are using a remote client to connect to the node performing the installation (SSH/Telnet to rac1 from a workstation configured with an X server), you will need to set the DISPLAY variable to point to your local workstation. Finally, verify remote access/user equivalence to all nodes in the cluster.

Verify Server and Enable X Server Access

# hostname

rac1

# xhost +

access control disabled, clients can connect from any host

Login as the oracle User Account and Set DISPLAY (if necessary)

# su - oracle

$ # IF YOU ARE USING A REMOTE CLIENT TO CONNECT TO THE

$ # NODE PERFORMING THE INSTALL

$ DISPLAY=<your local workstation>:0.0

$ export DISPLAY

Verify Remote Access/User Equivalence

Verify you are able to run the Secure Shell commands (ssh or scp) on the Solaris server you will be running the OUI from against all other Solaris servers in the cluster without being prompted for a password.

When using the secure shell method (which is what we are using in this article), user equivalence will need to be enabled on any new terminal shell session before attempting to run the OUI. To enable user equivalence for the current terminal shell session, perform the following steps, remembering to enter the pass phrase for the RSA key you generated when prompted:

$ exec ssh-agent $SHELL

$ ssh-add

Enter passphrase for /export/home/oracle/.ssh/id_rsa:

Identity added: /export/home/oracle/.ssh/id_rsa (/u01/app/oracle/.ssh/id_rsa)

$ ssh rac1 "date;hostname"

Wed Sep 2 16:45:43 CST 2009

rac1

$ ssh rac2 "date;hostname"

Wed Sep 2 16:46:24 CST 2009

rac2

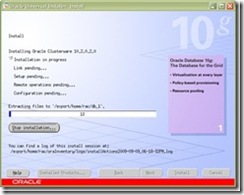

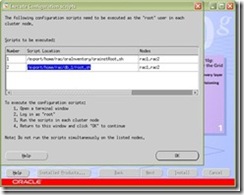

Installing Clusterware

Perform the following tasks to install the Oracle Clusterware:

$ /export/home/oracle/stage/clusterware/runInstaller

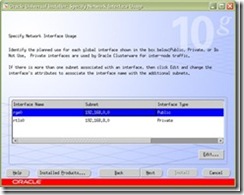

Following are the steps (Screen Shots)

Verify Oracle Clusterware Installation

After the installation of Oracle Clusterware, we can run through several tests to verify that the install was successful. Run the following commands on both nodes in the RAC cluster.

Check Cluster Nodes

$ /export/home/rac/product/crs/bin/olsnodes -n

rac1

rac2

Check Oracle Clusterware Auto-Start Scripts

$ ls -l /etc/init.d/init.*[ds]

-r-xr-xr-x 1 root root 2236 Jan 26 18:49 init.crs

-r-xr-xr-x 1 root root 4850 Jan 26 18:49 init.crsd

-r-xr-xr-x 1 root root 41163 Jan 26 18:49 init.cssd

-r-xr-xr-x 1 root root 3190 Jan 26 18:49 init.evmd
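Instead of reading the listing by eye, you can check for the four init scripts shown above with a small function. This is a hypothetical sketch. It takes the directory as a parameter, defaulting to /etc/init.d, and reports any script that is missing or not executable.

```shell
# check_crs_init: hypothetical helper that verifies the Clusterware init
# scripts listed above exist and are executable.
#   $1 = directory to check (defaults to /etc/init.d)
# Prints nothing and returns 0 when all four are present.
check_crs_init() {
  local dir=${1:-/etc/init.d} s rc=0
  for s in init.crs init.crsd init.cssd init.evmd; do
    if [ ! -x "$dir/$s" ]; then
      echo "missing or not executable: $dir/$s"
      rc=1
    fi
  done
  return $rc
}
```

Run `check_crs_init` on both nodes. To check that the daemons are actually running, `$ORA_CRS_HOME/bin/crsctl check crs` is the Clusterware-provided status check.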

Install Oracle Database 10g Release 2 Software

Install the Oracle Database 10g Release 2 software with the following:

$ /export/home/oracle/stage/database/runInstaller -ignoreSysPrereqs

Following are the Screen shots of the installation of Oracle 10g Software:

Listener Configuration (Netca)

Create the Oracle Cluster Database

The database creation process should only be performed from one of the Oracle RAC nodes in the cluster (rac1).

We will use DBCA to create the clustered database.

Verifying Terminal Shell Environment

As discussed in the previous section, the terminal shell environment needs to be configured for remote access and user equivalence to all nodes in the cluster before running the Database Configuration Assistant (DBCA). If you are still using the same terminal shell session from the previous section, you do not need to repeat any of the actions described below for setting up remote access and the DISPLAY variable.

Login as the oracle User Account and Set DISPLAY (if necessary)

# su - oracle

$ # IF YOU ARE USING A REMOTE CLIENT TO CONNECT TO THE

$ # NODE PERFORMING THE INSTALL

$ DISPLAY=<your local workstation>:0.0

$ export DISPLAY

Verify Remote Access/User Equivalence

Verify you are able to run the Secure Shell commands (ssh or scp) on the Solaris server you will be running the OUI from against all other Solaris servers in the cluster without being prompted for a password.

When using the secure shell method (which is what we are using in this article), user equivalence will need to be enabled on any new terminal shell session before attempting to run the OUI. To enable user equivalence for the current terminal shell session, perform the following steps, remembering to enter the pass phrase for the RSA key you generated when prompted:

Create the ASM Instance:

Migrate to ASM.

You must use RMAN to migrate the data files to ASM disk groups. All data files will be migrated to the newly created disk group, RAC1. The redo logs and control files are also created in RAC1. In a production environment, you should store redo logs on a different set of disks and disk controllers from the rest of the Oracle data files.

SQL> connect sys/sys@orcl as sysdba

Connected.

SQL> alter system set db_create_file_dest='+RAC1';

System altered.

SQL> alter system set control_files='+RAC1/cf1.dbf' scope=spfile;

System altered.

SQL> shutdown immediate;

[oracle@salmon1]$ rman target /

RMAN> startup nomount;

Oracle instance started

Total System Global Area 419430400 bytes

Fixed Size 779416 bytes

Variable Size 128981864 bytes

Database Buffers 289406976 bytes

Redo Buffers 262144 bytes

RMAN> restore controlfile from '/export/home/oracle/orcl/control01.ctl';

Starting restore at 26-MAY-05

using target database controlfile instead of recovery catalog

allocated channel: ORA_DISK_1

channel ORA_DISK_1: sid=160 devtype=DISK

channel ORA_DISK_1: copied controlfile copy

output filename=+RAC1/cf1.dbf

Finished restore at 26-MAY-05

RMAN> alter database mount;

database mounted

released channel: ORA_DISK_1

RMAN> backup as copy database format '+RAC1';

Starting backup at 26-MAY-05

allocated channel: ORA_DISK_1

channel ORA_DISK_1: sid=160 devtype=DISK

channel ORA_DISK_1: starting datafile copy

input datafile fno=00001 name=/export/home/oracle/orcl/system01.dbf

output filename=+RAC1/orcl/datafile/system.257.1 tag=TAG20050526T073442 recid=1 stamp=559294642

channel ORA_DISK_1: datafile copy complete, elapsed time: 00:02:49

channel ORA_DISK_1: starting datafile copy

input datafile fno=00003 name=/export/home/oracle/orcl/sysaux01.dbf

output filename=+RAC1/orcl/datafile/sysaux.258.1 tag=TAG20050526T073442 recid=2 stamp=559294735

channel ORA_DISK_1: datafile copy complete, elapsed time: 00:01:26

channel ORA_DISK_1: starting datafile copy

input datafile fno=00002 name=/export/home/oracle/orcl/undotbs01.dbf

output filename=+RAC1/orcl/datafile/undotbs1.259.1 tag=TAG20050526T073442 recid=3 stamp=559294750

channel ORA_DISK_1: datafile copy complete, elapsed time: 00:00:15

channel ORA_DISK_1: starting datafile copy

input datafile fno=00004 name=/export/home/oracle/orcl/users01.dbf

output filename=+RAC1/orcl/datafile/users.260.1 tag=TAG20050526T073442 recid=4 stamp=559294758

channel ORA_DISK_1: datafile copy complete, elapsed time: 00:00:07

channel ORA_DISK_1: starting datafile copy

copying current controlfile

output filename=+RAC1/orcl/controlfile/backup.261.1 tag=TAG20050526T073442 recid=5 stamp=559294767

channel ORA_DISK_1: datafile copy complete, elapsed time: 00:00:08

Finished backup at 26-MAY-05

RMAN> switch database to copy;

datafile 1 switched to datafile copy "+RAC1/orcl/datafile/system.257.1"

datafile 2 switched to datafile copy "+RAC1/orcl/datafile/undotbs1.259.1"

datafile 3 switched to datafile copy "+RAC1/orcl/datafile/sysaux.258.1"

datafile 4 switched to datafile copy "+RAC1/orcl/datafile/users.260.1"

RMAN> alter database open;

database opened

RMAN> exit
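If you need to repeat this migration, for example on a test copy first, the RMAN part of the session above can be collected in a command file and run in one pass. The helper below is a hypothetical sketch that only writes the file. The disk group, source control file and output path are parameters. It assumes the SQL*Plus steps (db_create_file_dest, control_files, shutdown immediate) have already been run.

```shell
# rman_asm_migration: hypothetical helper that writes the RMAN commands
# from the session above into a command file for review.
#   $1 = disk group name without the "+" (RAC1 in this article)
#   $2 = existing control file to restore from
#   $3 = command file to write
rman_asm_migration() {
  local dg=$1 cf=$2 out=$3
  cat > "$out" <<EOF
startup nomount;
restore controlfile from '$cf';
alter database mount;
backup as copy database format '+$dg';
switch database to copy;
alter database open;
EOF
}
```

For example, `rman_asm_migration RAC1 /export/home/oracle/orcl/control01.ctl /tmp/to_asm.rman` followed by `rman target / cmdfile=/tmp/to_asm.rman` replays the same steps.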

SQL> connect sys/sys@orcl as sysdba

Connected.

SQL> select tablespace_name, file_name from dba_data_files;

TABLESPACE FILE_NAME

--------------------- -----------------------------------------

USERS +RAC1/orcl/datafile/users.260.1

SYSAUX +RAC1/orcl/datafile/sysaux.258.1

UNDOTBS1 +RAC1/orcl/datafile/undotbs1.259.1

SYSTEM +RAC1/orcl/datafile/system.257.1

Migrate temp tablespace to ASM.

SQL> alter tablespace temp add tempfile size 100M;

Tablespace altered.

SQL> select file_name from dba_temp_files;

FILE_NAME

-------------------------------------

+RAC1/orcl/tempfile/temp.264.3

Migrate redo logs to ASM.

Drop the existing redo logs and recreate them in the ASM disk group, RAC1.

SQL> alter system set db_create_online_log_dest_1='+RAC1';

System altered.

SQL> alter system set db_create_online_log_dest_2='+RAC1';

System altered.

SQL> select group#, member from v$logfile;

GROUP# MEMBER

--------------- ----------------------------------

1 /u03/oradata/orcl/redo01.log

2 /u03/oradata/orcl/redo02.log

SQL> alter database add logfile group 3 size 10M;

Database altered.

SQL> alter system switch logfile;

System altered.

SQL> alter database drop logfile group 1;

Database altered.

SQL> alter database add logfile group 1 size 100M;

Database altered.

SQL> alter database drop logfile group 2;

Database altered.

SQL> alter database add logfile group 2 size 100M;

Database altered.

SQL> alter system switch logfile;

System altered.

SQL> alter database drop logfile group 3;

Database altered.

SQL> select group#, member from v$logfile;

GROUP# MEMBER

--------------- ----------------------------------------

1 +RAC1/orcl/onlinelog/group_1.265.3

1 +RAC1/orcl/onlinelog/group_1.257.1

2 +RAC1/orcl/onlinelog/group_2.266.3

2 +RAC1/orcl/onlinelog/group_2.258.1
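The redo log swap above follows a fixed pattern, so the SQL can be generated for any number of groups. This is a minimal sketch: `gen_redo_rebuild` is a hypothetical helper that only prints SQL. It relies on db_create_online_log_dest_1 and db_create_online_log_dest_2 being set as above, so each ADD LOGFILE creates Oracle-managed members without explicit file names (one member per destination, which is why each group in the listing has two members). Unlike the session above, it issues ALTER SYSTEM CHECKPOINT before every drop, because Oracle will not drop a group that is still needed for crash recovery, and the current group can never be dropped.

```shell
#!/bin/sh
# Hypothetical generator: print the SQL that rebuilds the listed redo log
# groups in ASM, in the same order as the session above.
# Usage: gen_redo_rebuild TEMP_GROUP TEMP_SIZE SIZE GROUP...
gen_redo_rebuild() {
  temp_group=$1 temp_size=$2 size=$3
  shift 3
  # A temporary group lets the instance switch away from the current group.
  echo "alter database add logfile group $temp_group size $temp_size;"
  echo "alter system switch logfile;"
  for g in "$@"; do
    # Checkpoint so the old group is no longer needed for crash recovery.
    echo "alter system checkpoint;"
    echo "alter database drop logfile group $g;"
    echo "alter database add logfile group $g size $size;"
  done
  # Move off the temporary group, then drop it.
  echo "alter system switch logfile;"
  echo "alter system checkpoint;"
  echo "alter database drop logfile group $temp_group;"
}
```

Review the printed statements first. Assuming OS authentication as the oracle user, they could then be run with `gen_redo_rebuild 3 10M 100M 1 2 | sqlplus -s "/ as sysdba"`.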

Create a pfile from the spfile.

Create and retain a copy of the database pfile. You'll add more RAC-specific parameters to it later, in the post-installation steps.

SQL> connect sys/sys@orcl as sysdba

Connected.

SQL> create pfile='/tmp/tmppfile.ora' from spfile;

File created.

Add an additional control file.

If an additional control file is required for redundancy, you can create it in ASM as you would on any other filesystem.

SQL> connect sys/sys@orcl as sysdba

Connected to an idle instance.

SQL> startup mount

ORACLE instance started.

Total System Global Area 419430400 bytes

Fixed Size 779416 bytes

Variable Size 128981864 bytes

Database Buffers 289406976 bytes

Redo Buffers 262144 bytes

Database mounted.

SQL> alter database backup controlfile to '+RAC1/cf2.dbf';

Database altered.

SQL> alter system set control_files='+RAC1/cf1.dbf','+RAC1/cf2.dbf' scope=spfile;

System altered.

SQL> shutdown immediate;

ORA-01109: database not open

Database dismounted.

ORACLE instance shut down.

SQL> startup

ORACLE instance started.

Total System Global Area 419430400 bytes

Fixed Size 779416 bytes

Variable Size 128981864 bytes

Database Buffers 289406976 bytes

Redo Buffers 262144 bytes

Database mounted.

Database opened.

SQL> select name from v$controlfile;

NAME

---------------------------------------

+RAC1/cf1.dbf

+RAC1/cf2.dbf
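Every path in the CONTROL_FILES list has to match the real file name exactly; a typo or stray space inside the quotes may leave the instance unable to find that file at the next startup. A small generator avoids hand-typing the quoted list. This is a minimal sketch: `gen_control_files_sql` is a hypothetical helper that only prints the statement.

```shell
#!/bin/sh
# Hypothetical helper: build the ALTER SYSTEM statement for CONTROL_FILES
# from the given paths, quoting each one exactly as passed.
gen_control_files_sql() {
  list=""
  for f in "$@"; do
    # Append a comma only after the first entry; no padding around paths.
    list="${list:+$list,}'$f'"
  done
  echo "alter system set control_files=$list scope=spfile;"
}
```

For example, `gen_control_files_sql +RAC1/cf1.dbf +RAC1/cf2.dbf` prints the statement used in this step.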

After successfully migrating all the data files over to ASM, the old data files are no longer needed and can be removed. Your single-instance database is now running on ASM!

Starting / Stopping the Cluster

All commands in this section are run from rac1.

Stopping the Oracle RAC 10g Environment

The first step is to stop the Enterprise Manager Database Console and the Oracle instance. When the instance (and related services) are down, bring down the ASM instance. Finally, shut down the node applications (Virtual IP, GSD, TNS Listener, and ONS).

$ export ORACLE_SID=orcl1

$ emctl stop dbconsole

$ srvctl stop instance -d orcl -i orcl1

$ srvctl stop asm -n rac1

$ srvctl stop nodeapps -n rac1

Starting the Oracle RAC 10g Environment

The first step is to start the node applications (Virtual IP, GSD, TNS Listener, and ONS). When the node applications are successfully started, then bring up the ASM instance. Finally, bring up the Oracle instance (and related services) and the Enterprise Manager Database console.

$ export ORACLE_SID=orcl1

$ srvctl start nodeapps -n rac1

$ srvctl start asm -n rac1

$ srvctl start instance -d orcl -i orcl1

$ emctl start dbconsole
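If you restart nodes often, the two sequences above can be wrapped in a small script so the order is never mixed up. This is a minimal sketch, not an Oracle-supplied tool: the functions `rac_stop` and `rac_start` and the variables `DRY_RUN` and `DB_NAME` are hypothetical, and it assumes srvctl and emctl are on the PATH of the oracle user.

```shell
#!/bin/sh
# Hypothetical wrapper around the srvctl/emctl sequences above.
# DRY_RUN=1 prints the commands instead of running them.
DB_NAME=${DB_NAME:-orcl}

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Stop order: dbconsole, database instance, ASM, node applications.
rac_stop() {
  node=$1 sid=$2
  ORACLE_SID=$sid; export ORACLE_SID
  run emctl stop dbconsole
  run srvctl stop instance -d "$DB_NAME" -i "$sid"
  run srvctl stop asm -n "$node"
  run srvctl stop nodeapps -n "$node"
}

# Start order is the reverse: node applications, ASM, instance, dbconsole.
rac_start() {
  node=$1 sid=$2
  ORACLE_SID=$sid; export ORACLE_SID
  run srvctl start nodeapps -n "$node"
  run srvctl start asm -n "$node"
  run srvctl start instance -d "$DB_NAME" -i "$sid"
  run emctl start dbconsole
}
```

After sourcing the file, `rac_stop rac1 orcl1` and `rac_start rac1 orcl1` reproduce the steps above. The sketch carries on if one step fails; appending `|| return 1` to each `run` call would make it stop instead.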

Start/Stop All Instances with SRVCTL

Start/stop all the instances and their enabled services.

$ srvctl start database -d orcl

$ srvctl stop database -d orcl

Verify the RAC Cluster and Database Configuration

The following RAC verification checks should be performed on both Oracle RAC nodes in the cluster. For this article, however, I will only be performing checks from rac1.

This section provides several srvctl commands and SQL queries to validate your Oracle RAC 10g configuration.

There are five node-level tasks defined for srvctl:

- Adding and deleting node-level applications

- Setting and unsetting the environment for node-level applications

- Administering node applications

- Administering ASM instances

- Starting and stopping a group of programs that include virtual IP addresses, listeners, Oracle Notification Services, and Oracle Enterprise Manager agents (for maintenance purposes)

Status of all instances and services

$ srvctl status database -d orcl

Instance orcl1 is running on node rac1

Instance orcl2 is running on node rac2
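When this check is scripted, it helps to turn the status output into a pass/fail result. This is a minimal sketch: `not_running_instances` is a hypothetical helper, and it assumes srvctl reports a stopped instance in the form `Instance orcl2 is not running on node rac2`, so compare it against your own output first.

```shell
#!/bin/sh
# Hypothetical helper: read "srvctl status database -d <db>" output on stdin,
# print the instances reported as not running, and return 1 if there are any.
not_running_instances() {
  awk '
    # Matches lines like: Instance orcl2 is not running on node rac2
    $1 == "Instance" && $3 == "is" && $4 == "not" { print $2; bad = 1 }
    END { exit bad }
  '
}
```

For example, `srvctl status database -d orcl | not_running_instances || echo "an instance is down"`.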

Status of a single instance

$ srvctl status instance -d orcl -i orcl1

Instance orcl1 is running on node rac1

Status of a named service globally across the database

$ srvctl status service -d orcl -s orcltest

Service orcltest is running on instance(s) orcl2, orcl1

Status of node applications on a particular node

$ srvctl status nodeapps -n rac1

VIP is running on node: rac1

GSD is running on node: rac1

Listener is running on node: rac1

ONS daemon is running on node: rac1
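The same idea works for the node applications, with one difference: a component that is missing from the output should count as a failure too, so the sketch checks for each of the four expected components rather than looking for "not running". `check_nodeapps` is a hypothetical helper, and it assumes the wording shown above.

```shell
#!/bin/sh
# Hypothetical helper: read "srvctl status nodeapps -n <node>" output on stdin
# and report any of VIP, GSD, Listener or ONS that is not shown as running.
check_nodeapps() {
  apps_out=$(cat)
  apps_rc=0
  for comp in VIP GSD Listener "ONS daemon"; do
    # Each component must report "<comp> is running on node".
    if ! printf '%s\n' "$apps_out" | grep -q "^$comp is running on node"; then
      echo "$comp is not running"
      apps_rc=1
    fi
  done
  return $apps_rc
}
```

For example, `srvctl status nodeapps -n rac1 | check_nodeapps`.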

Status of an ASM instance

$ srvctl status asm -n rac1

ASM instance +ASM1 is running on node rac1

List all configured databases

$ srvctl config database

orcl

Display configuration for our RAC database

$ srvctl config database -d orcl

rac1 orcl1 /export/home/rac/product/10.2.0/db_1

rac2 orcl2 /export/home/rac/product/10.2.0/db_1
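Rather than hard-coding orcl1 and orcl2, the per-instance status checks can be derived from this configuration output. This is a minimal sketch: `status_cmds_from_config` is a hypothetical helper that assumes each line has the form `node instance oracle_home`, as above, and it only prints the srvctl commands.

```shell
#!/bin/sh
# Hypothetical helper: turn "srvctl config database -d <db>" output on stdin
# into one "srvctl status instance" command per configured instance.
status_cmds_from_config() {
  db=$1
  # Fields: node name, instance name, Oracle home (the home is not used here).
  while read -r node inst home || [ -n "$node" ]; do
    [ -n "$inst" ] || continue
    echo "srvctl status instance -d $db -i $inst"
  done
}
```

For example, `srvctl config database -d orcl | status_cmds_from_config orcl | sh` would run the status check for every configured instance.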

Display the configuration for the ASM instance(s)

$ srvctl config asm -n rac1

+ASM1 /export/home/rac/product/10.2.0/db_1

All running instances in the cluster

SELECT inst_id,
       instance_number inst_no,
       instance_name inst_name,
       parallel,
       status,
       database_status db_status,
       active_state state,
       host_name host
FROM gv$instance
ORDER BY inst_id;

INST_ID INST_NO INST_NAME PAR STATUS DB_STATUS STATE HOST

-------- -------- ---------- --- ------- ------------ --------- --------

1 1 orcl1 YES OPEN ACTIVE NORMAL rac1

2 2 orcl2 YES OPEN ACTIVE NORMAL rac2
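To check this result automatically, the query output can be scanned for instances that are not OPEN/ACTIVE and for a missing instance. This is a minimal sketch: `check_gv_instance` is a hypothetical helper, and it assumes one row per line in the column order shown above (for example, the query spooled from SQL*Plus without column wrapping).

```shell
#!/bin/sh
# Hypothetical helper: read the gv$instance query output on stdin and check
# that the expected number of instances are all OPEN and ACTIVE.
# Usage: check_gv_instance EXPECTED_COUNT
check_gv_instance() {
  expected=$1
  awk -v want="$expected" '
    # Data rows start with a numeric INST_ID; header and separator are skipped.
    $1 ~ /^[0-9]+$/ {
      n++
      # Columns: INST_ID INST_NO INST_NAME PAR STATUS DB_STATUS STATE HOST
      if ($5 != "OPEN" || $6 != "ACTIVE") { print $3 " is " $5 "/" $6; bad = 1 }
    }
    END {
      if (n != want) { print "expected " want " instances, found " n+0; bad = 1 }
      exit bad
    }
  '
}
```

For this two-node cluster, `check_gv_instance 2 < instances.lst` would succeed silently, where `instances.lst` is a hypothetical spool file.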

Cheers!!!
